Get Started#

Time to Complete: 30 minutes

Programming Language: Python 3

Configure Docker#

To configure Docker:

Run Docker as Non-Root: Follow the steps in Manage Docker as a non-root user.

Configure Proxy (if required):

Set up proxy settings for Docker client and containers as described in Docker Proxy Configuration.

Example

~/.docker/config.json:

    {
      "proxies": {
        "default": {
          "httpProxy": "http://<proxy_server>:<proxy_port>",
          "httpsProxy": "http://<proxy_server>:<proxy_port>",
          "noProxy": "127.0.0.1,localhost"
        }
      }
    }

Configure the Docker daemon proxy as per Systemd Unit File.
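The client proxy file shown above can also be generated rather than typed by hand. The following is a minimal Python sketch under stated assumptions: the function name build_docker_proxy_config and the values proxy.example.com and 8080 are hypothetical placeholders, and the script only prints the JSON instead of writing to ~/.docker/config.json.

```python
import json


def build_docker_proxy_config(proxy_server, proxy_port,
                              no_proxy=("127.0.0.1", "localhost")):
    """Return the "proxies" section used in ~/.docker/config.json.

    Hypothetical helper: it mirrors the example structure and uses the
    same proxy URL for HTTP and HTTPS, as the example does.
    """
    proxy_url = f"http://{proxy_server}:{proxy_port}"
    return {
        "proxies": {
            "default": {
                "httpProxy": proxy_url,
                "httpsProxy": proxy_url,
                "noProxy": ",".join(no_proxy),
            }
        }
    }


if __name__ == "__main__":
    # proxy.example.com and 8080 are placeholders, not real settings.
    config = build_docker_proxy_config("proxy.example.com", 8080)
    print(json.dumps(config, indent=2))
```

Review the printed JSON before copying it into ~/.docker/config.json, especially if that file already contains other settings.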

Enable Log Rotation:

Add the following configuration to /etc/docker/daemon.json:

    {
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "10m",
        "max-file": "5"
      }
    }

Reload and restart Docker:

sudo systemctl daemon-reload

sudo systemctl restart docker
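If /etc/docker/daemon.json already holds other settings, the log rotation keys need to be merged into it rather than replacing the file. The sketch below shows one way to do that merge in Python. The helper name merge_log_rotation is hypothetical, and the sketch works on an in-memory dictionary only; reading and writing the real file (which requires root) is left out.

```python
import json

# Log rotation settings taken from the example above.
LOG_ROTATION = {
    "log-driver": "json-file",
    "log-opts": {"max-size": "10m", "max-file": "5"},
}


def merge_log_rotation(existing):
    """Return a copy of an existing daemon.json dict with log rotation added.

    Hypothetical helper: unrelated keys and unrelated log-opts entries
    are preserved; only the rotation settings are overwritten.
    """
    merged = dict(existing)
    merged["log-driver"] = LOG_ROTATION["log-driver"]
    log_opts = dict(existing.get("log-opts", {}))
    log_opts.update(LOG_ROTATION["log-opts"])
    merged["log-opts"] = log_opts
    return merged


if __name__ == "__main__":
    # Example input only; a real daemon.json may contain different keys.
    current = {"dns": ["8.8.8.8"]}
    print(json.dumps(merge_log_rotation(current), indent=2))
```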

Clone source code#

git clone https://github.com/open-edge-platform/edge-ai-suites.git

cd edge-ai-suites/manufacturing-ai-suite/industrial-edge-insights-multimodal

Deploy with Docker Compose#

Update the following fields in .env:

- INFLUXDB_USERNAME
- INFLUXDB_PASSWORD
- VISUALIZER_GRAFANA_USER
- VISUALIZER_GRAFANA_PASSWORD
- MTX_WEBRTCICESERVERS2_0_USERNAME
- MTX_WEBRTCICESERVERS2_0_PASSWORD
- HOST_IP
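Before deploying, it can help to confirm that none of these fields was left empty. The following Python sketch checks only for presence of a value. It assumes simple KEY=VALUE lines without inline comments, the helper name missing_env_fields is hypothetical, and it does not enforce the value rules described inside the .env file itself.

```python
# Field names taken from the list above.
REQUIRED_FIELDS = [
    "INFLUXDB_USERNAME",
    "INFLUXDB_PASSWORD",
    "VISUALIZER_GRAFANA_USER",
    "VISUALIZER_GRAFANA_PASSWORD",
    "MTX_WEBRTCICESERVERS2_0_USERNAME",
    "MTX_WEBRTCICESERVERS2_0_PASSWORD",
    "HOST_IP",
]


def missing_env_fields(env_text):
    """Return the required fields that are absent or empty in .env text.

    Hypothetical helper: assumes plain KEY=VALUE lines; blank lines and
    lines starting with '#' are skipped.
    """
    values = {}
    for raw_line in env_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return [name for name in REQUIRED_FIELDS if not values.get(name)]


if __name__ == "__main__":
    # Sample content for illustration; read the real .env file instead.
    sample = "INFLUXDB_USERNAME=admin\nINFLUXDB_PASSWORD=\nHOST_IP=10.0.0.5\n"
    print(missing_env_fields(sample))
```

To check the real file, pass the result of open(".env").read() from the industrial-edge-insights-multimodal directory.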

Deploy the sample app using only one of the following options.

NOTE:

- The make up command below fails if the required fields above are not populated according to the rules called out in the .env file.
- The sample app is deployed by pulling its pre-built container images from Docker Hub or from an internal container registry. For an internal registry, log in to the Docker registry from the CLI and set the DOCKER_REGISTRY environment variable in the .env file at edge-ai-suites/manufacturing-ai-suite/industrial-edge-insights-multimodal.
- The CONTINUOUS_SIMULATOR_INGESTION variable in the .env file (for Docker Compose) is set to true by default, which enables continuous looping of the simulator data. To ingest the simulator data only once (without looping), set this variable to false.
- The update rate of the graph and table may lag by a few seconds and might not align perfectly with the video stream, because Grafana's minimum refresh interval is 5 seconds.
- The graph and table may initially display "No Data" because the Time Series Analytics Microservice needs some time to install its dependency packages before it can start running.

cd <PATH_TO_REPO>/edge-ai-suites/manufacturing-ai-suite/industrial-edge-insights-multimodal

make up

Use the following command to verify that all containers are active and error-free.

Note: The make status command may show errors in containers such as ia-grafana when the user has not yet logged in for the first time or when the session has timed out. Log in to Grafana again. If the functionality works, ignore the user token not found errors and other minor errors that may show up in the Grafana logs.

cd <PATH_TO_REPO>/edge-ai-suites/manufacturing-ai-suite/industrial-edge-insights-multimodal

make status
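As a complementary check to make status, the container states reported by docker ps can be inspected programmatically. The Python sketch below is an illustration only, not how make status works internally. The function names are hypothetical, and it assumes that a container whose status does not start with "Up" needs attention.

```python
import subprocess


def list_container_status():
    """Return `docker ps -a` output as tab-separated name and status lines.

    Requires Docker on the host; not called automatically in this sketch.
    """
    result = subprocess.run(
        ["docker", "ps", "-a", "--format", "{{.Names}}\t{{.Status}}"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout


def containers_not_up(ps_output):
    """Return names of containers whose status does not start with 'Up'.

    Hypothetical helper: expects one "name<TAB>status" pair per line.
    """
    problems = []
    for line in ps_output.splitlines():
        if not line.strip():
            continue
        name, _, status = line.partition("\t")
        if not status.startswith("Up"):
            problems.append(name)
    return problems
```

On a host with the sample app deployed, containers_not_up(list_container_status()) returns an empty list when every container is running.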

Verify the Weld Defect Detection Results#

Get into the InfluxDB* container.

Note: For the Helm deployment, use kubectl exec -it <influxdb-pod-name> -n <namespace> -- /bin/bash, where <namespace> is the namespace in which the application was deployed and <influxdb-pod-name> is the InfluxDB pod name.

docker exec -it ia-influxdb bash

Run the following commands to see the data in InfluxDB*.

NOTE: Ignore the error message "There was an error writing history file: open /.influx_history: read-only file system" that appears in the InfluxDB shell. It does not affect any functionality when working with the InfluxDB commands.

    # For the command below, fetch INFLUXDB_USERNAME and INFLUXDB_PASSWORD from the `.env` file
    influx -username <username> -password <passwd>
    use datain # database access
    show measurements
    # Run the query below to check the measurement processed
    # by the Time Series Analytics microservice
    select * from "weld-sensor-anomaly-data"
    # Run the query below to check the measurement processed
    # by the DL Streamer pipeline server microservice
    select * from "vision-weld-classification-results"
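The same queries can be run non-interactively by passing them to the influx CLI through docker exec. The Python sketch below only builds the command line; it assumes the InfluxDB 1.x influx CLI with its -database and -execute options is what runs inside the ia-influxdb container. The function name and the limit clause are additions for illustration and are not part of the original commands.

```python
def influx_query_command(username, password, measurement,
                         limit=5, database="datain"):
    """Build a docker exec command that runs one InfluxQL query.

    Hypothetical helper: the `limit` clause is an added convenience to
    keep the output short; drop it to match the queries above exactly.
    """
    query = f'select * from "{measurement}" limit {int(limit)}'
    return [
        "docker", "exec", "ia-influxdb", "influx",
        "-username", username,
        "-password", password,
        "-database", database,
        "-execute", query,
    ]


if __name__ == "__main__":
    # <username> and <passwd> come from the .env file, as noted above.
    command = influx_query_command("<username>", "<passwd>",
                                   "weld-sensor-anomaly-data")
    print(" ".join(command))
```

The returned list can be passed to subprocess.run on the host where the containers are running.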

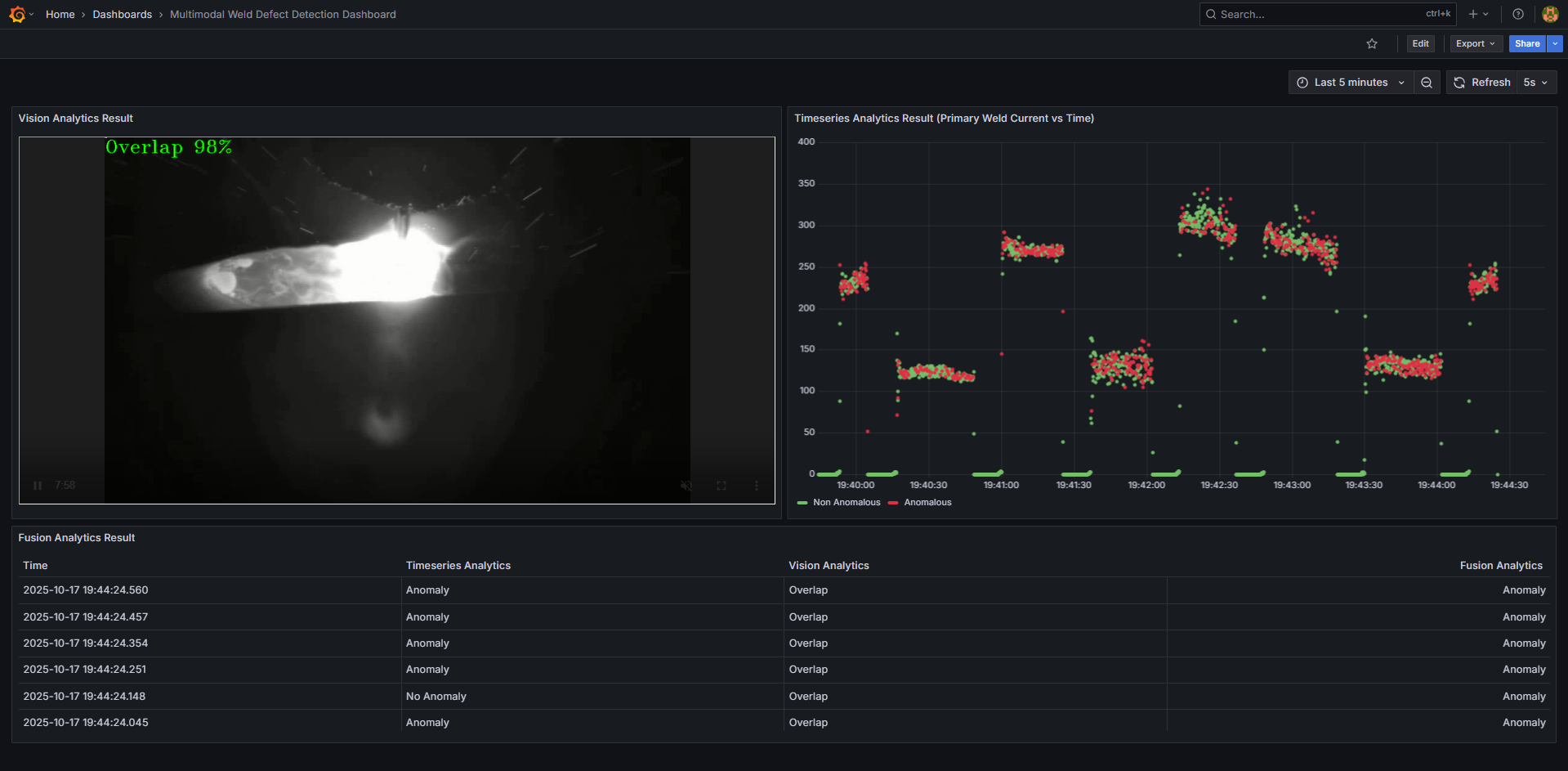

Check the output in Grafana.

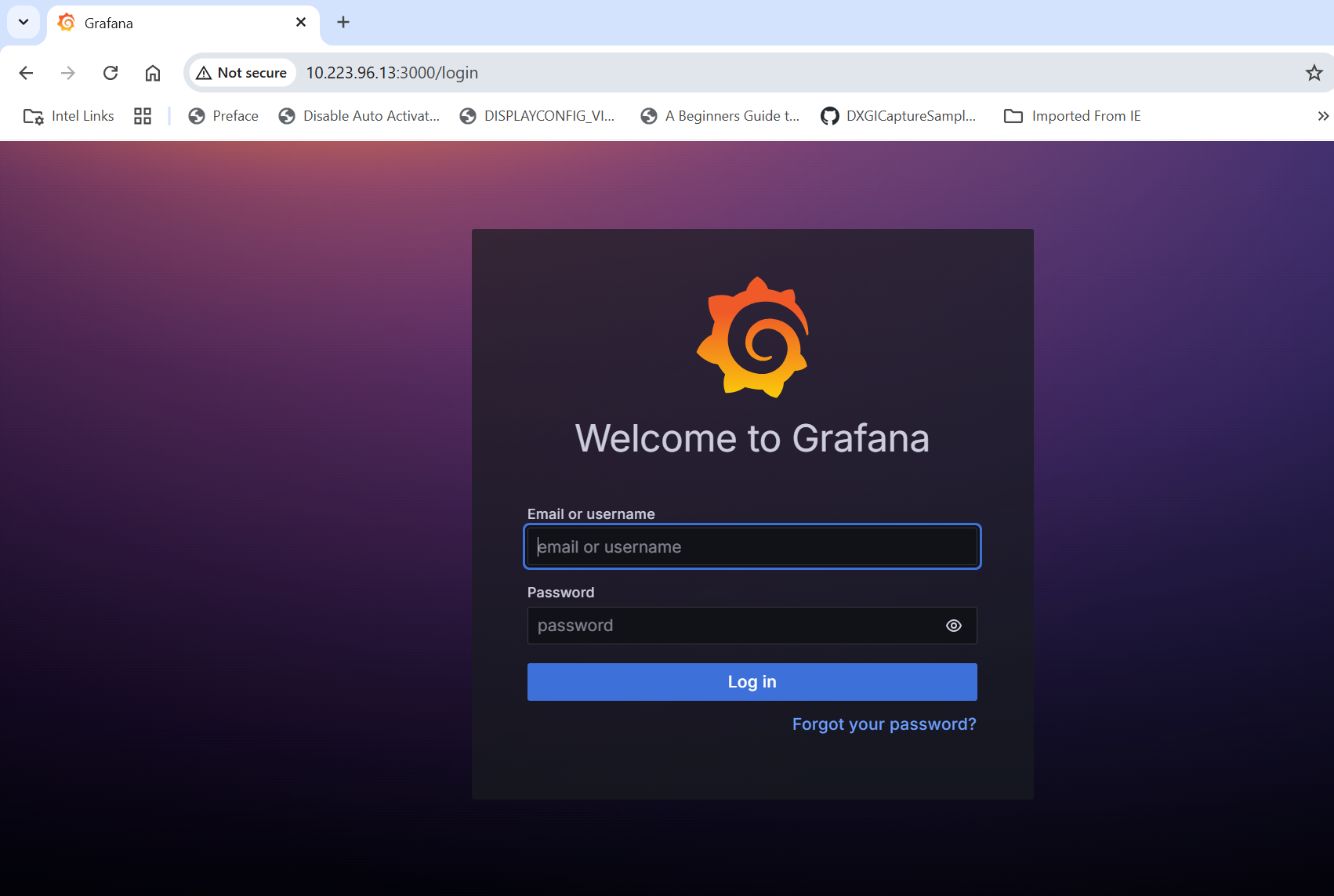

Use the link https://<host_ip>:3000 to launch Grafana from a browser (preferably Chrome).

Log in to Grafana with the values set for VISUALIZER_GRAFANA_USER and VISUALIZER_GRAFANA_PASSWORD in the .env file, and select Multimodal Weld Defect Detection Dashboard.

After logging in, click Dashboard.

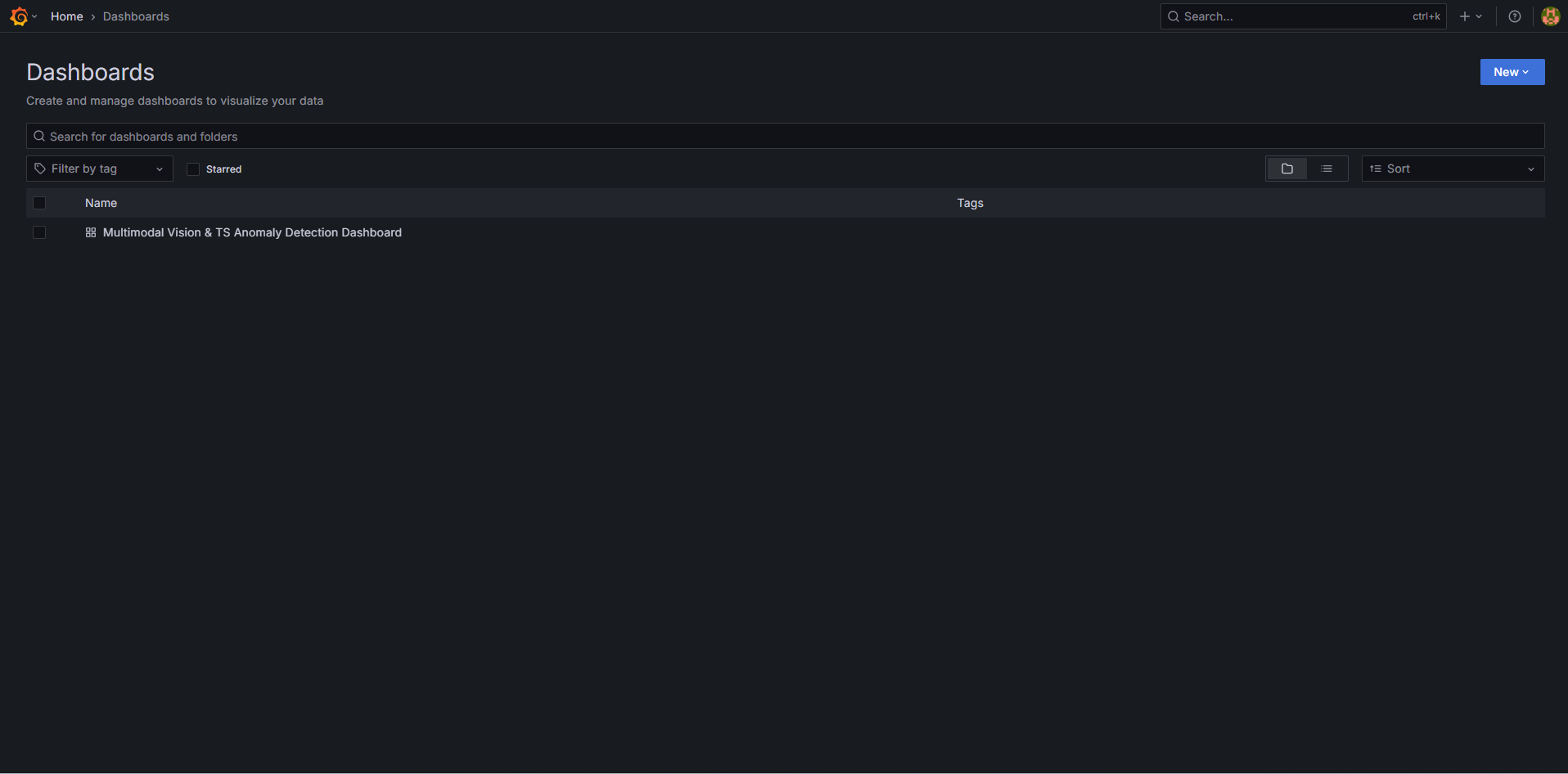

Select the Multimodal Weld Defect Detection Dashboard.

The dashboard displays the weld defect detection results.

Bring down the sample app#

cd <PATH_TO_REPO>/edge-ai-suites/manufacturing-ai-suite/industrial-edge-insights-multimodal

make down

Check logs - troubleshooting#

Check the container logs to catch any failures:

docker ps

docker logs -f <container_name>

docker logs -f <container_name> 2>&1 | grep -i error
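When scanning logs for failures, it can be useful to drop the messages this guide describes as ignorable, such as the Grafana user token not found errors. The Python sketch below filters already-captured log text. The function name and the contents of the ignore list are assumptions for illustration; extend the list to suit the deployment.

```python
# Messages treated as harmless, based on the Grafana note in this guide.
IGNORABLE_MESSAGES = ("user token not found",)


def error_lines(log_text, ignorable=IGNORABLE_MESSAGES):
    """Return log lines that mention 'error' and are not ignorable.

    Hypothetical helper: matching is case-insensitive substring search,
    similar in spirit to `grep -i error`.
    """
    selected = []
    for line in log_text.splitlines():
        lowered = line.lower()
        if "error" not in lowered:
            continue
        if any(message in lowered for message in ignorable):
            continue
        selected.append(line)
    return selected


if __name__ == "__main__":
    # Invented sample lines for illustration, not real container output.
    sample = (
        "level=info msg=started\n"
        "level=error msg=\"user token not found\"\n"
        "ERROR: connection refused\n"
    )
    for line in error_lines(sample):
        print(line)
```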

Advanced setup#

How to build from source and deploy: Guide for building from source and deploying with Docker Compose.

How to deploy with Helm: Guide for deploying with Helm.

How to configure MQTT alerts: Guide for configuring MQTT alerts in the Time Series Analytics microservice.

How to update config: Guide for updating the configuration of the Time Series Analytics microservice.