Model Preparation & Conversion#

Open Edge Platform relies heavily on the OpenVINO™ Toolkit to optimize and deploy AI models efficiently on Intel hardware commonly used in edge computing environments, including CPUs, GPUs, VPUs, and FPGAs.

OpenVINO (short for Open Visual Inference and Neural Network Optimization) is an open-source toolkit from Intel that:

- Optimizes trained AI models (from frameworks like PyTorch, TensorFlow, ONNX, etc.)
- Deploys them efficiently on Intel edge devices
- Maximizes inference speed and resource utilization

Note that OpenVINO is NOT for training, but for running AI models quickly and efficiently once trained.

OpenVINO supports the following model formats: PyTorch, TensorFlow, TensorFlow Lite, ONNX, and PaddlePaddle. Although PyTorch, TensorFlow, ONNX, and PaddlePaddle are great AI frameworks, the OpenVINO™ Toolkit can provide additional inference performance benefits on Intel hardware because it is heavily optimized for this task.

OpenVINO IR Format#

OpenVINO IR is the proprietary model format of OpenVINO, benefiting from the full extent of its features. It is obtained by converting a model from one of the supported formats (PyTorch, TensorFlow, TensorFlow Lite, ONNX, PaddlePaddle) using the model conversion API or OpenVINO Converter. The process translates common deep learning operations of the original network to their counterpart representations in OpenVINO and tunes them with the associated weights and biases. The resulting OpenVINO IR format contains two files:

- .xml: Describes the model topology.
- .bin: Contains the weights and binary data.
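Because an IR model consists of two files, it can help to check that both are present before deployment. The following is a minimal sketch using only the Python standard library. The helper name `find_ir_pair` is hypothetical, and the sketch assumes that the .bin file sits next to the .xml file and shares its base name.

```python
import tempfile
from pathlib import Path


def find_ir_pair(xml_path):
    """Return (xml, bin) paths for an IR model, or raise if a file is missing.

    Assumption: the .bin file is in the same directory as the .xml file and
    has the same base name.
    """
    xml_file = Path(xml_path)
    if xml_file.suffix != ".xml":
        raise ValueError(f"expected an .xml file, got: {xml_file.name}")
    bin_file = xml_file.with_suffix(".bin")
    missing = [p.name for p in (xml_file, bin_file) if not p.is_file()]
    if missing:
        raise FileNotFoundError(f"missing IR file(s): {', '.join(missing)}")
    return xml_file, bin_file


if __name__ == "__main__":
    # Demonstrate the check with empty placeholder files, not a real model.
    with tempfile.TemporaryDirectory() as tmp:
        xml_file = Path(tmp) / "model.xml"
        xml_file.write_text("<net/>")
        xml_file.with_suffix(".bin").write_bytes(b"")
        print(find_ir_pair(xml_file))
```

A check like this catches the common mistake of copying only the .xml file to the target device.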

Conversion Benefits#

- Saving to IR to improve first inference latency
- Saving to IR in FP16 to save space
- Saving to IR to avoid large dependencies in inference code
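The space saving from FP16 follows from simple arithmetic: each FP16 value takes 2 bytes instead of the 4 bytes of FP32. The sketch below works this out for a hypothetical model with 25 million parameters. The parameter count is an illustrative assumption, and the estimate covers weight data only, not the topology file or other constants.

```python
import struct

# Size in bytes of one value in each precision ("<" selects standard sizes).
BYTES_FP32 = struct.calcsize("<f")
BYTES_FP16 = struct.calcsize("<e")


def weights_size_mb(num_parameters, bytes_per_value):
    """Approximate weight storage in megabytes (1 MB = 1,000,000 bytes)."""
    return num_parameters * bytes_per_value / 1_000_000


# Hypothetical parameter count, chosen only to make the arithmetic easy to follow.
num_parameters = 25_000_000

fp32_mb = weights_size_mb(num_parameters, BYTES_FP32)
fp16_mb = weights_size_mb(num_parameters, BYTES_FP16)

print(f"FP32 weights: {fp32_mb:.1f} MB")
print(f"FP16 weights: {fp16_mb:.1f} MB")
```

Under these assumptions the weight data shrinks by half, which is where most of the saving in the .bin file comes from.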

For more information about OpenVINO IR, refer to the OpenVINO IR format article.

Model preparation#

Geti#

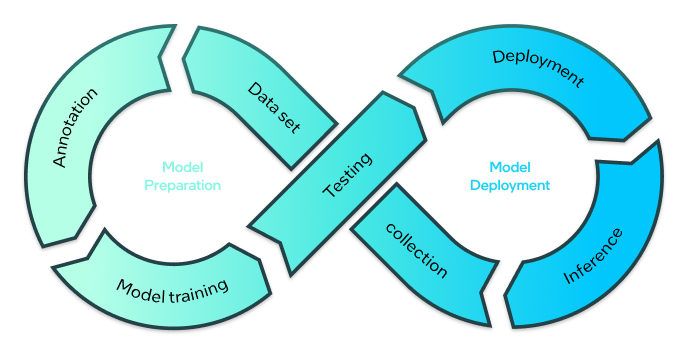

The Geti™ platform enables enterprise teams to rapidly build computer vision AI models. Through an intuitive graphical interface, users add image or video data, make annotations, train, retrain, export, and optimize AI models for deployment. Equipped with state-of-the-art technology such as active learning, task chaining, and smart annotations, the Geti™ platform reduces labor-intensive tasks, enables collaborative model development, and speeds up model creation.

For more information about Intel Geti, please refer to Geti Documentation.

Roboflow, etc.#

Model conversion#

Conversion from Open Formats#

AI models are often portable: models from PyTorch, TensorFlow, ONNX, and other frameworks work with OpenVINO out of the box. The sections below briefly describe the porting options and link to helpful resources.

Converting PyTorch models#

PyTorch is an open-source machine learning framework developed primarily by Meta AI. It’s widely used for building and training deep learning models, including neural networks for tasks like computer vision, natural language processing, and generative AI.

PyTorch models cannot be passed directly to core.read_model. Instead, use the ov.convert_model API to obtain an ov.Model from a PyTorch model object. Here is the simplest example of PyTorch model conversion, using a model from torchvision:

    import torchvision
    import openvino as ov

    # Load a pretrained ResNet-50 and convert the in-memory model object.
    model = torchvision.models.resnet50(weights='DEFAULT')
    ov_model = ov.convert_model(model)

For more information, please refer to the Converting a PyTorch Model article in the OpenVINO documentation and the pytorch-to-openvino notebook on GitHub.
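As a slightly fuller sketch of the same flow, the example below converts a small PyTorch module with an example input and then saves the result as IR with FP16 weight compression. The tiny network, the input shape, the file name `tiny_cnn.xml`, and the helper name `convert_and_save` are all illustrative assumptions. The import is guarded only so that the sketch stays importable on machines without torch or openvino installed.

```python
import tempfile
from pathlib import Path

try:
    import torch
    import openvino as ov
except ImportError:  # packages not installed; the helper below then returns None
    torch = None
    ov = None


def convert_and_save(output_dir):
    """Convert a tiny CNN to an ov.Model and save it as IR.

    Returns the (.xml, .bin) paths, or None when torch/openvino are unavailable.
    """
    if torch is None or ov is None:
        return None

    # Hypothetical stand-in for a real trained model.
    model = torch.nn.Sequential(
        torch.nn.Conv2d(3, 8, kernel_size=3, padding=1),
        torch.nn.ReLU(),
    ).eval()

    # An example input lets the converter trace the model.
    example_input = torch.rand(1, 3, 32, 32)
    ov_model = ov.convert_model(model, example_input=example_input)

    # save_model writes the .xml topology and a matching .bin weights file.
    xml_path = Path(output_dir) / "tiny_cnn.xml"
    ov.save_model(ov_model, str(xml_path), compress_to_fp16=True)
    return xml_path, xml_path.with_suffix(".bin")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(convert_and_save(tmp))
```

Saving once and loading the IR at inference time keeps PyTorch out of the deployment environment, which is one of the conversion benefits listed earlier.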

Converting TensorFlow models#

TensorFlow is an open-source machine learning framework developed by Google for building, training, and deploying AI and deep learning models. It’s one of the most popular tools in the AI world — used for everything from simple linear regression to massive neural networks powering image recognition, speech processing, and large language models.

TensorFlow models saved in the frozen graph format can be passed directly to read_model. As an example, download the classification.pb model from our model repository into the model folder:

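    import openvino as ov

    core = ov.Core()

    # "CPU" is an assumption; use any device listed in core.available_devices.
    device_name = "CPU"

    # Read the frozen graph directly and compile it for the target device.
    tf_model_path = "model/classification.pb"
    model_tf = core.read_model(model=tf_model_path)
    compiled_model_tf = core.compile_model(model=model_tf, device_name=device_name)

    # Save the model as OpenVINO IR (.xml plus .bin).
    ov.save_model(model_tf, output_model="model/exported_tf_model.xml")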

For more information, including examples in C++, C, and CLI, please refer to the Converting a TensorFlow Model article in the OpenVINO documentation.

Converting ONNX models#

ONNX (Open Neural Network Exchange) is an open format built to represent machine learning models. ONNX defines a common set of operators - the building blocks of machine learning and deep learning models - and a common file format to enable AI developers to use models with a variety of frameworks, tools, runtimes, and compilers. OpenVINO supports reading models in ONNX format directly. That means they can be used with OpenVINO Runtime without any prior conversion.

To convert an ONNX model to an ov.Model object explicitly, run model conversion with the path to the input .onnx file:

    import openvino as ov

    ov_model = ov.convert_model('your_model_file.onnx')

For more information, including the CLI approach, please refer to the Converting an ONNX Model article in the OpenVINO documentation.
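The distinction between reading a model directly and converting it first can be summarized in a small lookup. This is a minimal sketch that mirrors only what this page states (IR, ONNX, and TensorFlow frozen graph files can be read directly); OpenVINO may be able to read further formats directly. The helper name `loading_strategy` is hypothetical.

```python
from pathlib import Path

# File types this page describes as readable without prior conversion.
DIRECTLY_READABLE = {
    ".xml": "OpenVINO IR",
    ".onnx": "ONNX",
    ".pb": "TensorFlow frozen graph",
}


def loading_strategy(model_path):
    """Return the name of the OpenVINO entry point suggested by this page.

    "read_model" means the file can go straight to core.read_model;
    "convert_model" means ov.convert_model is the documented route. For PyTorch,
    convert_model expects a loaded model object rather than a file path.
    """
    suffix = Path(model_path).suffix.lower()
    return "read_model" if suffix in DIRECTLY_READABLE else "convert_model"


if __name__ == "__main__":
    for path in ("model/classification.pb", "your_model_file.onnx",
                 "your_model_file.pdmodel"):
        print(path, "->", loading_strategy(path))
```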

Converting PaddlePaddle models#

PaddlePaddle (PArallel Distributed Deep LEarning) is a simple, efficient and extensible deep learning framework, open-sourced to professional communities since 2016. It is an industrial platform with advanced technologies and rich features that cover core deep learning frameworks, basic model libraries, end-to-end development kits, tools & components as well as service platforms.

To convert the model, use openvino.convert_model and specify the path to the input .pdmodel model file:

    import openvino as ov

    ov_model = ov.convert_model('your_model_file.pdmodel')

For more information, including CLI options, please refer to the Converting a PaddlePaddle Model article in the OpenVINO documentation and the paddle-to-openvino notebook on GitHub.

Conversion from TAO#

NVIDIA TAO Toolkit (short for Train, Adapt, Optimize) is a low-code AI model development framework that helps to build, fine-tune, and deploy deep learning models, without needing to write complex training code from scratch. It’s part of NVIDIA’s DeepStream and TensorRT ecosystem and is designed mainly for computer vision and speech AI tasks.

Common model formats used by TAO at various stages of model development:

| Stage | Format | Description |
|---|---|---|
| Training | | TAO internal model checkpoint |
| Export | .etlt | Encrypted TensorRT-ready model |
| Deployment | .engine | TensorRT optimized inference engine |
| Optional | .onnx | Open format for interoperability (optional export) |

There is no option to directly convert .etlt → .onnx, because .etlt (Encrypted TAO model) is a proprietary, encrypted format meant for TensorRT deployment only.

Option 1: Export ONNX directly from TAO Toolkit#

When using the tao export command, include the --onnx_file option:

    tao export <task> --onnx_file output_model.onnx

This is supported for most networks, though some — especially NVIDIA’s proprietary backbones — may not allow ONNX export.

Option 2: Use TensorRT Engine Conversion (Not Recommended for ONNX)#

tao-converter can turn an .etlt file into a TensorRT engine (.engine), but it cannot produce ONNX:

    tao-converter model.etlt -k <encryption_key> -o <output_layers> -d <input_dims> -t fp16 -e model.engine

The resulting .engine file is an optimized binary for inference and cannot be converted back to ONNX.
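When the tao-converter call is scripted, building the argument list in code avoids quoting mistakes. The sketch below assembles the same command shown above without running it. The helper name `build_tao_converter_command`, the key `EXAMPLE_KEY`, the layer name `output_layer`, and the input dimensions are hypothetical, and the sketch assumes that output layers and input dimensions are passed as comma-separated lists.

```python
import shlex


def build_tao_converter_command(etlt_path, encryption_key, output_layers,
                                input_dims, engine_path, precision="fp16"):
    """Return the tao-converter command as an argument list (not executed)."""
    return [
        "tao-converter", str(etlt_path),
        "-k", encryption_key,
        "-o", ",".join(output_layers),               # assumed comma-separated
        "-d", ",".join(str(d) for d in input_dims),  # assumed comma-separated
        "-t", precision,
        "-e", str(engine_path),
    ]


# Hypothetical values for illustration only.
command = build_tao_converter_command(
    etlt_path="model.etlt",
    encryption_key="EXAMPLE_KEY",
    output_layers=["output_layer"],
    input_dims=(3, 224, 224),
    engine_path="model.engine",
)

# shlex.join renders the list as a shell-safe string for logging.
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` on a machine where tao-converter is installed.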